AI Governance 2024

AI governance refers to the policies, frameworks, and processes that ensure the responsible and ethical development, deployment, and use of artificial intelligence technologies. As AI becomes increasingly integrated into sectors such as healthcare, finance, and transportation, its use raises complex ethical, legal, and operational concerns. Market trends in AI governance show growing demand for robust frameworks that address data privacy, algorithmic bias, accountability, transparency, and fairness. Because AI systems are now entrusted with decisions that affect people's lives, it is crucial to establish governance mechanisms that ensure these technologies are used responsibly and ethically.

At its core, AI governance aims to strike a balance that fosters innovation while mitigating the risks of AI technologies. This includes ensuring that AI systems are built to high standards of security and transparency, with ethical considerations designed in from the start. Effective governance frameworks also give organizations clear guidelines for managing AI projects, avoiding discriminatory practices, and complying with regulatory standards. As AI technology continues to evolve, so too must the governance structures that oversee it, making governance an increasingly important area of focus for businesses and governments alike.

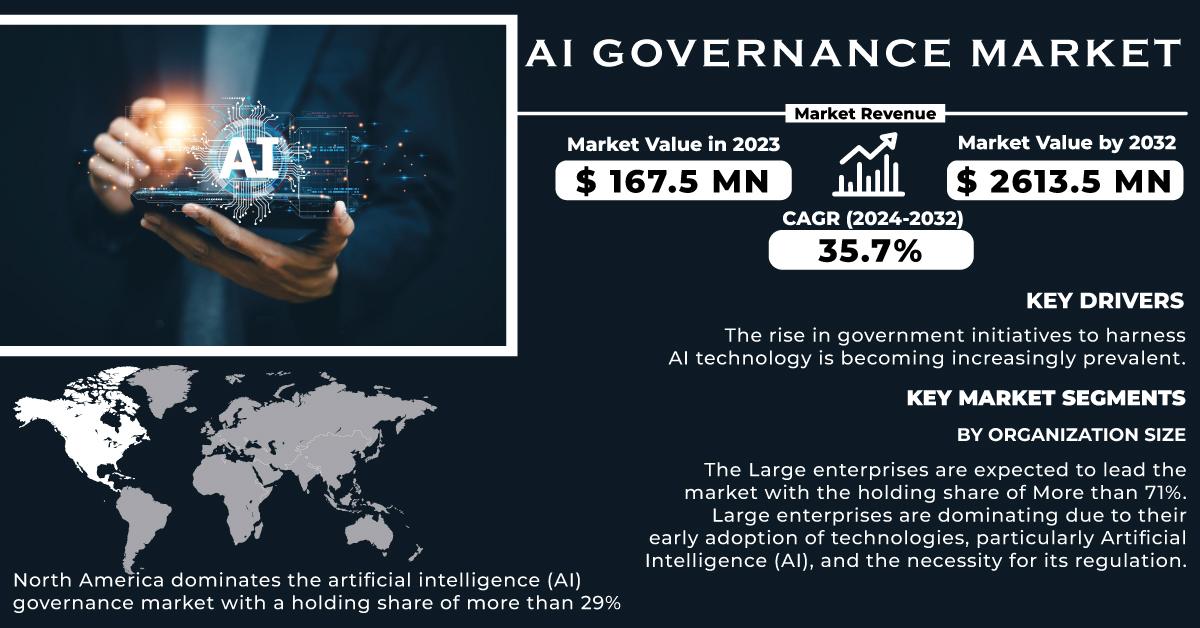

The AI governance market was valued at USD 160.4 million in 2023 and is expected to reach USD 2,761.3 million by 2032, growing at a CAGR of 37.21% from 2024 to 2032.
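The growth rate quoted above can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end value / start value)^(1/n) - 1, where n is the number of annual compounding periods. The sketch below assumes, since the report does not state its convention, that 2023 is the base year and that growth compounds over nine annual periods to 2032. The function names `cagr` and `project` are illustrative only.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` periods apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the report, in USD million.
# Assumption: 2023 is the base year, so 2032 - 2023 = 9 compounding periods.
start_2023 = 160.4
end_2032 = 2761.3
periods = 2032 - 2023

implied_rate = cagr(start_2023, end_2032, periods)
print(f"Implied CAGR: {implied_rate:.2%}")
print(f"2032 value at the reported 37.21%: {project(start_2023, 0.3721, periods):,.1f}")
```

Under that assumption the implied rate comes out at roughly 37.2%, consistent with the reported 37.21%; the small gap is most likely rounding in the published figures.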

Key Drivers of AI Governance

Several factors are driving the increasing need for AI governance. One of the primary drivers is the growing reliance on AI in decision-making across industries. From hiring practices to loan approvals and medical diagnoses, AI algorithms increasingly influence critical decisions that were traditionally made by humans. This reliance introduces new risks, such as algorithmic bias or unfair treatment, that may adversely affect individuals or groups. AI governance frameworks are essential for helping these systems make fair, unbiased, and transparent decisions, ultimately protecting consumers and stakeholders from harm.

Another driver is the increasing pressure from regulators and policymakers to ensure AI systems comply with emerging laws and ethical standards. Governments worldwide are beginning to recognize the importance of AI regulation to protect privacy, promote transparency, and prevent discriminatory practices. The European Union’s AI Act, for example, is one of the first attempts at creating comprehensive AI regulations, and it is influencing other regions to consider similar measures. Organizations that adopt AI governance practices can stay ahead of potential regulatory challenges and demonstrate their commitment to responsible AI deployment.

Challenges in Implementing AI Governance

While the benefits of AI governance are clear, implementing effective frameworks presents several challenges. One major obstacle is the pace of AI development: as AI technologies evolve, keeping governance frameworks up to date becomes increasingly difficult. Traditional governance models may struggle to address the unique complexities of AI, such as the need for real-time monitoring and the lack of transparency in certain algorithms (for example, deep learning models). This can make it difficult for organizations to ensure compliance and accountability, particularly when AI systems operate as a "black box" whose reasoning behind decisions is not easily understood.

Additionally, the lack of standardized frameworks and clear guidelines across industries complicates the implementation of AI governance. As AI is used in diverse fields, from finance to healthcare to education, governance frameworks need to be flexible and adaptable. However, the absence of universally accepted standards for best practices makes it challenging for companies to develop governance structures that are both comprehensive and scalable.

The Role of Ethical Considerations in AI Governance

A significant focus of AI governance is ensuring that AI technologies are developed and used in an ethical manner. This includes addressing issues related to fairness, accountability, and transparency. Ethical AI governance emphasizes the need to avoid biases in algorithms that could perpetuate discrimination or inequality. It also stresses the importance of clear and transparent decision-making processes, so users and stakeholders can understand how AI systems arrive at conclusions. By embedding ethical principles into AI development, companies can mitigate the risk of harmful societal impact and build public trust in their AI systems.

Furthermore, ethical AI governance involves promoting inclusivity and diversity in AI design and decision-making processes. This can help ensure that AI systems are developed to serve a broad range of people, rather than reinforcing the biases or perspectives of a narrow group. Organizations that prioritize ethical considerations in AI governance will be better positioned to develop solutions that align with societal values and contribute to positive outcomes for all stakeholders.

The Future of AI Governance

The future of AI governance is closely tied to the rapid advancement of AI technologies and the increasing complexity of their applications. As AI continues to shape industries, governance frameworks will evolve to address emerging concerns such as data ownership, AI accountability, and ethical implications. The rise of autonomous systems, including self-driving cars and AI-powered medical devices, will require even more robust governance structures to ensure safety and accountability.

As AI governance becomes a central focus for organizations and governments alike, the demand for AI governance solutions will continue to rise. By adopting forward-thinking governance practices, organizations can harness the full potential of AI while minimizing its risks. As regulatory landscapes solidify and industry standards emerge, AI governance will play a critical role in shaping the responsible and ethical development of artificial intelligence in the years to come.

In conclusion, AI governance is a crucial element in ensuring that AI technologies are developed and used responsibly. As the demand for AI systems grows, so does the need for comprehensive governance frameworks that address ethical, legal, and operational concerns. By implementing strong AI governance practices, organizations can navigate the challenges of AI deployment while ensuring that these technologies contribute positively to society.

Contact Us:

Akash Anand – Head of Business Development & Strategy

info@snsinsider.com

Phone: +1-415-230-0044 (US) | +91-7798602273 (IND)

About Us

SNS Insider is one of the leading market research and consulting agencies that dominates the market research industry globally. Our company's aim is to give clients the knowledge they require in order to function in changing circumstances. In order to give you current, accurate market data, consumer insights, and opinions so that you can make decisions with confidence, we employ a variety of techniques, including surveys, video talks, and focus groups around the world.
